During testing you will probably be holding some confidential information on your system. You need to make sure that system is secure; otherwise you may learn the hard way what it is like to get pwned.

However, you do need to strike a balance: you must still be able to communicate with the target system, which means opening some ports, but only for the services that will help you compromise that host or network.

Backtrack 4 (like many Linux platforms) ships with iptables, but some people never load a rule-set, assuming they are protected as long as they don't run any vulnerable services. Take great care.

To ensure some degree of safety, I would recommend:

- Change your root password to something strong (don't forget your default MySQL password also)

- Make sure you don't install any services that automatically start each time you boot.

- If you install any new services (vsftpd for example) prevent them from starting on boot by editing the links in /etc/rc2.d/...

- Only start services when you need them

- Watch what you keep in your FTP share and www root etc.

- Use a firewall, with a restrictive rule-set

- Only open ports when you need them

- (Which is what I am going to discuss here...)
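
As a rough way to check the "nothing starts on boot" points above, the sketch below lists the start links in a runlevel directory. The function name `list_boot_services` is made up for illustration, and it assumes the usual SysV naming where start links look like `S20vsftpd`.

```shell
#!/bin/sh
# Hypothetical helper: list the services a SysV-style runlevel directory
# will start at boot. Start links are assumed to be named S<NN><service>.
list_boot_services() {
    rc_dir="${1:-/etc/rc2.d}"
    for link in "$rc_dir"/S[0-9][0-9]*; do
        # Skip the unexpanded pattern when nothing matches.
        [ -e "$link" ] || [ -L "$link" ] || continue
        name="${link##*/}"
        # Strip the leading "S" and the two-digit sequence number.
        echo "${name#S[0-9][0-9]}"
    done
}
```

Running `list_boot_services /etc/rc2.d` prints one service name per line. If something unexpected shows up, removing its links is the fix; on Debian-derived systems `update-rc.d -f vsftpd remove` is one way to do that, though that is an assumption about your install rather than something specific to Backtrack.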

Useful guide to iptables

Here is a good article to show you the basics of iptables configuration.

https://help.ubuntu.com/community/IptablesHowTo#Saving%20iptables

Saving and retrieving firewall rule-sets

iptables rule-sets can be saved and retrieved with the following commands:

Saving

iptables-save > /etc/iptables.rules

Retrieving

iptables-restore < /etc/iptables.rules

Using this technique means that you can pre-build several rule-sets and switch between them easily, or have a file that you edit and reload to add, remove or change rules.
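
Switching between pre-built rule-sets could be wrapped up along these lines. This is only a sketch: the function names and the `RULES_PREFIX` variable are invented here, and it assumes each rule-set is saved as `/etc/iptables.rules.<name>` and that you run it as root.

```shell
#!/bin/sh
# Assumed naming convention: every rule-set lives at $RULES_PREFIX.<name>
RULES_PREFIX="${RULES_PREFIX:-/etc/iptables.rules}"

# Print the file path for a named rule-set.
ruleset_path() {
    echo "${RULES_PREFIX}.$1"
}

# Load a named rule-set, refusing to run if the file is missing.
load_ruleset() {
    file="$(ruleset_path "$1")"
    if [ ! -r "$file" ]; then
        echo "no such rule-set: $file" >&2
        return 1
    fi
    iptables-restore < "$file"
}
```

With that in place, `load_ruleset shieldsup` would load `/etc/iptables.rules.shieldsup`, and a second file with a more permissive set could be swapped in the same way.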

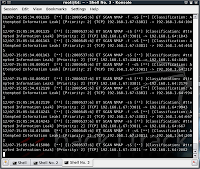

Here is an example which provides a reasonable level of protection. It also contains some useful rules, commented out, which could be quickly added.

# Example iptables firewall rule-set for Backtrack 4

# To use, uncomment or add any relevant ports you need, and run:

# iptables-restore < /etc/iptables.rules.shieldsup

*filter

:INPUT ACCEPT [0:0]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

# Established connections are allowed

-A INPUT -m state --state RELATED,ESTABLISHED -j ACCEPT

# Connections from your local machine are allowed

-A INPUT -i lo -j ACCEPT

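# Uncomment inbound connections to your own services, or create new ones here.

# When you uncomment a rule, also delete its trailing "# ..." note:

# iptables-restore only treats a line as a comment when it starts with #.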

#-A INPUT -i eth0 -p tcp -m tcp --dport 80 -j ACCEPT # HTTP port 80

#-A INPUT -i eth0 -p tcp -m tcp --dport 443 -j ACCEPT # HTTPS port 443

#-A INPUT -i eth0 -p tcp -m tcp --dport 4444 -j ACCEPT # Standard reverse shell port

#-A INPUT -i eth0 -p tcp -m tcp --dport 21 -j ACCEPT # FTP port 21

#-A INPUT -i eth0 -p udp -m udp --dport 69 -j ACCEPT # TFTP port 69

# Drop everything else inbound

-A INPUT -j DROP

# Your own client-side-initiated connections are allowed outbound

-A OUTPUT -m state --state NEW,RELATED,ESTABLISHED -j ACCEPT

# Drop everything else outbound

-A OUTPUT -j DROP

COMMIT

# For more info visit www.insidetrust.com
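
Once you start uncommenting rules it is easy to lose track of what a file actually lets in. The sketch below prints the protocol/port pairs that a saved rule-set accepts inbound. The function name `active_inbound_ports` is hypothetical, and it only understands rules written in the same style as the example above (`-A INPUT ... -p <proto> ... --dport <port> ... -j ACCEPT`).

```shell
#!/bin/sh
# Hypothetical helper: print proto/port for every active (uncommented)
# inbound ACCEPT rule in a saved rule-set file.
active_inbound_ports() {
    # Lines starting with "#" do not match the ^-A anchor, so they are skipped.
    grep -E '^-A INPUT .*--dport [0-9]+.*-j ACCEPT' "$1" |
        sed -E 's/.*-p ([a-z]+).*--dport ([0-9]+).*/\1\/\2/'
}
```

For example, `active_inbound_ports /etc/iptables.rules.shieldsup` prints nothing for the file exactly as shown, because every service rule is still commented out. If your version of iptables-restore supports it, `iptables-restore --test < /etc/iptables.rules.shieldsup` parses the file without committing it, which is a cheap sanity check before loading.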

More targeted bypass rules for the basic rule-set

In addition you may want to add more targeted rules, for example so that only a single host can access a payload that you have hosted on your web-server.

In that case, insert a more specific rule ahead of the final DROP. The -I option places the rule at the top of the chain, so it is evaluated before the basic rule-set:

iptables -I INPUT -p tcp -m tcp -s a.b.c.d/32 --dport 80 -j ACCEPT

So, for example, to add inbound rules so that a target at 192.168.1.229 can access your HTTP server and a handler you have listening on port 443, type in the following rules:

iptables -I INPUT -p tcp -m tcp -s 192.168.1.229/32 --dport 80 -j ACCEPT

iptables -I INPUT -p tcp -m tcp -s 192.168.1.229/32 --dport 443 -j ACCEPT
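
Targeted rules like these are easy to forget once the job is done. One possible approach, sketched below, is to generate the commands from a small function so that the same host and port list can be used to remove them again with `-D`. The function name `target_rules` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print iptables commands that insert (-I) or
# delete (-D) per-host TCP ACCEPT rules, one per port.
# Usage: target_rules ACTION HOST PORT [PORT...]
target_rules() {
    action="$1"
    host="$2"
    shift 2
    for port in "$@"; do
        echo "iptables $action INPUT -p tcp -m tcp -s $host/32 --dport $port -j ACCEPT"
    done
}
```

The function only prints the commands, so you can read them before anything changes. As root, `target_rules -I 192.168.1.229 80 443 | sh` would apply them, and `target_rules -D 192.168.1.229 80 443 | sh` would take the same rules out again afterwards.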

Testing

Don't forget to test that your rule-sets are working from an external host before you rely on them in a live situation!
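
If you don't have a port scanner handy on the external host, a quick TCP check can be improvised as below. This is a sketch only: `probe_port` is a made-up name, and it assumes the external machine has bash (for its /dev/tcp feature) and the coreutils `timeout` command.

```shell
#!/bin/sh
# Hypothetical helper: report whether a TCP port accepts connections.
# Usage: probe_port HOST PORT   (run it from a different machine)
probe_port() {
    # bash's /dev/tcp attempts a TCP connect; timeout stops us waiting
    # forever on ports where the firewall silently DROPs packets.
    if timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null; then
        echo "$1:$2 open"
    else
        echo "$1:$2 closed or filtered"
    fi
}
```

Something like `for p in 21 80 443 4444; do probe_port a.b.c.d "$p"; done`, with a.b.c.d replaced by your testing machine's address, should report every port as closed or filtered until you open it. It only covers TCP, so a UDP service such as the one on port 69 needs a different check.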

Look after yourself ;o)